-

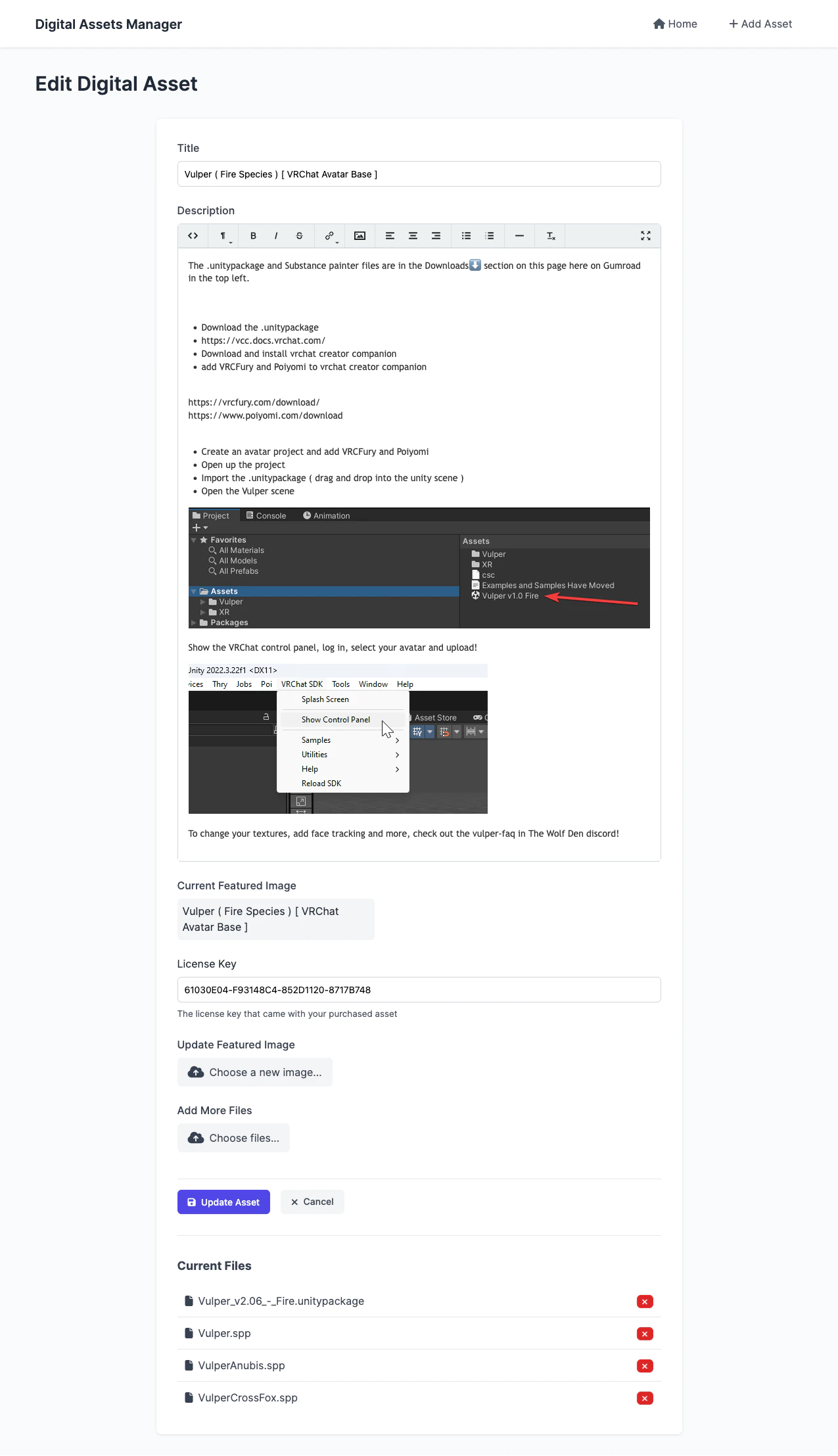

Attached Files

+

+

Files

{% if asset.files %}

```diff
diff --git a/templates/base.html b/templates/base.html
index 562a66f..9695792 100644
--- a/templates/base.html
+++ b/templates/base.html
@@ -27,11 +27,47 @@
crossorigin="anonymous"
referrerpolicy="no-referrer"
/>
+
{% block head %}{% endblock %}
+
+
+  +
+  +
+  +
+
```